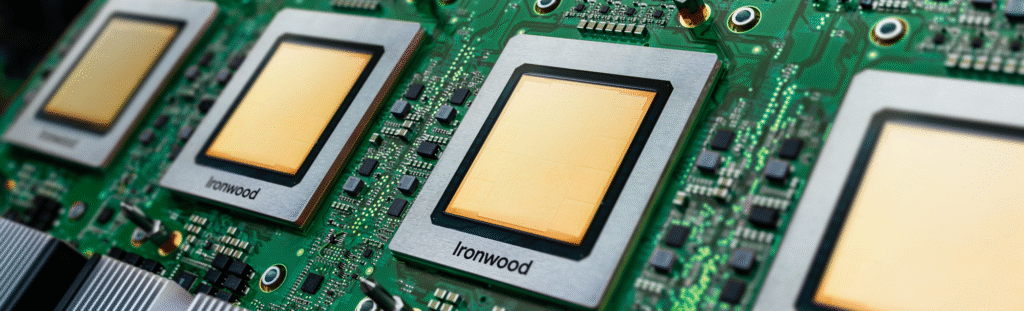

Google Ironwood TPU Explained: What It Is, How It Works, and Why It Matters for the Future of AI

Artificial intelligence is evolving faster than ever, and companies need hardware capable of running massive AI models with high speed and efficiency. To meet this demand, Google has introduced Ironwood, its seventh-generation Tensor Processing Unit (TPU). This chip is designed for one purpose: powering large-scale AI workloads with exceptional performance.

Many users are confused at first and ask:

“Is Ironwood a CPU or RAM?”

The answer is straightforward: Ironwood is neither. It is a dedicated AI accelerator built to process advanced machine learning and inference tasks at extreme scale.

This article explains what Ironwood is, how it works, its real-world use cases, and why it matters for businesses, cloud engineers, and AI developers.

What Is Ironwood?

Ironwood is a TPU (Tensor Processing Unit), a specialized processor built by Google specifically for artificial intelligence and large-scale machine learning.

Ironwood is not:

a CPU

system RAM

a traditional GPU

Ironwood is:

a custom AI processor

designed to accelerate large models like LLMs and generative AI systems

part of Google’s AI supercomputer infrastructure

optimised for inference performance

It is built to run modern AI models such as large language models, agent-based systems, image generators, and complex enterprise AI applications.

Why Ironwood Is Not a CPU or RAM

Not a CPU

A CPU handles general-purpose computing tasks such as running applications and operating system processes. It is flexible, but not designed for the heavy mathematical operations required in AI workloads.

Not RAM

RAM is volatile system memory that holds the code and data of running programs. It stores data rather than computing on it, and ordinary system RAM offers far less bandwidth than the on-package memory that large AI models depend on.

Ironwood as an AI Processor

Ironwood is an AI accelerator that includes:

a high-performance TPU chip

ultra-fast HBM3E (High Bandwidth Memory) attached to the chip

a high-speed interconnect system to connect thousands of TPUs together

Its design focuses solely on accelerating AI computations.

Key Technical Features of Ironwood

Ironwood delivers a major leap forward in speed, performance, and scalability.

1. High AI Processing Power

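Ironwood is built to execute the dense tensor operations at the heart of neural networks, chiefly large matrix multiplications, far faster than a general-purpose CPU can, and it is designed to compete with high-end GPUs on these workloads. This enables rapid training and inference for large AI models.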
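To make "tensor operation" concrete, here is a minimal sketch in plain Python of the matrix multiplication that accelerators like Ironwood speed up, along with the usual estimate of how many floating-point operations it costs. The function names and the matrix sizes are illustrative assumptions, not anything published by Google.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows (a is m x k, b is k x n)."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    cols = len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def matmul_flops(m, k, n):
    """Approximate FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
print(matmul_flops(4096, 4096, 4096))
```

A single layer of a large model performs many products of this kind per request, which is why hardware dedicated to them matters.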

2. High Bandwidth Memory (HBM3E)

Ironwood includes advanced HBM3E memory directly attached to the TPU chip. This memory stores billions of model parameters and provides extremely high bandwidth compared to traditional RAM.
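As a rough illustration of why memory capacity matters, the sketch below estimates how much memory a model's weights alone occupy at different numeric precisions. The 70-billion-parameter model is a hypothetical example, and the calculation ignores activations and caches, which add to the total in practice.

```python
def model_footprint_gb(num_params, bytes_per_param):
    """Memory needed to hold the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Hypothetical model size, chosen only for illustration.
PARAMS = 70_000_000_000

for name, width in [("fp32", 4), ("bf16", 2), ("fp8", 1)]:
    print(f"{name}: {model_footprint_gb(PARAMS, width):.0f} GB")
```

Halving the bytes per parameter halves the footprint, which is one reason lower-precision formats are popular for inference.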

3. Large-Scale Connectivity

Google can link thousands of Ironwood chips together to form a unified AI supercomputer. This makes it possible to run very large models at high efficiency.
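One reason to link chips is that a large model may not fit in a single chip's memory. The sketch below estimates the minimum number of chips needed to hold a model's weights when they are split evenly. The per-chip capacity of 192 GB, the usable fraction, and the model size are all assumptions made for the example; check Google's published specifications before relying on any figure.

```python
import math


def chips_needed(model_gb, hbm_per_chip_gb, usable_fraction=0.8):
    """Minimum chips to hold the weights, reserving some memory for activations and caches."""
    usable_gb = hbm_per_chip_gb * usable_fraction
    return math.ceil(model_gb / usable_gb)


# Assumed values: a 1,400 GB model and 192 GB of HBM per chip.
print(chips_needed(1400, 192))
```

Real deployments usually use more chips than this minimum, because throughput and latency targets, not just capacity, drive the sizing.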

4. Improved Energy Efficiency

Ironwood provides greater performance per watt than previous TPU generations, reducing cloud operational costs and improving sustainability.
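Performance per watt is the same quantity as operations per joule, so it translates directly into the energy a fixed workload consumes. The following sketch shows the arithmetic; the workload size and the efficiency figures are invented for illustration and do not describe any real chip.

```python
JOULES_PER_KWH = 3.6e6


def energy_kwh(total_ops, ops_per_joule):
    """Energy in kilowatt-hours to complete a workload at a given efficiency."""
    return total_ops / ops_per_joule / JOULES_PER_KWH


WORKLOAD_OPS = 3.6e18               # hypothetical workload
baseline = energy_kwh(WORKLOAD_OPS, 1e12)  # assumed older chip
improved = energy_kwh(WORKLOAD_OPS, 2e12)  # assumed chip with twice the efficiency

print(f"baseline: {baseline:.2f} kWh, improved: {improved:.2f} kWh")
```

Doubling the operations per joule halves the energy for the same work, which is where the cost and sustainability benefits come from.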

What Ironwood Is Used For

Ironwood is built to power the latest generation of AI technologies. Its key use cases include:

1. Running Large Language Models (LLMs)

Modern LLMs require tremendous computational power. Ironwood accelerates these models with low latency and high throughput.

2. High-Volume AI Inference

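Inference means running an already trained model on new inputs to produce outputs such as predictions or generated text. Ironwood is optimised for inference workloads such as: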

customer service automation

chatbots and conversational systems

recommendation engines

image and video generation

prediction and analytics tools

Ironwood excels in real-time, high-volume usage.
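To show what inference involves at its simplest, here is a toy sketch: the weights are fixed, as if a training run had already produced them, and each request only computes an output from new input. The weights, bias, and feature values are made up; production models do the same thing with billions of parameters.

```python
import math

# Parameters assumed to come from an earlier, hypothetical training run.
WEIGHTS = [0.8, -0.4]
BIAS = 0.1


def infer(features):
    """Return a score between 0 and 1 for one input, without changing the weights."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


print(infer([1.0, 2.0]))
print(infer([0.0, 10.0]))
```

Because nothing is updated during inference, the work per request is predictable, and serving cost scales with request volume.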

3. AI Agents and Reasoning Systems

Next-generation AI agents that perform reasoning, planning, and task automation rely heavily on fast inference. Ironwood provides that speed.

4. Enterprise AI Applications

Businesses can deploy:

productivity automation tools

workflow intelligence

cloud-based AI services

Ironwood powers these applications in Google Cloud with reliability and scale.

Benefits of Ironwood TPU

The Ironwood TPU introduces several major advantages for developers, enterprises, and cloud engineers.

1. Extremely High AI Performance

Ironwood delivers faster processing for large models, which means quicker responses and the ability to serve more users simultaneously.
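The link between processing speed and user capacity can be sketched with simple arithmetic: if a deployment generates a certain number of tokens per second in total, and each user needs a minimum rate for a responsive experience, the ratio bounds how many users can be served at once. All numbers below are hypothetical.

```python
def concurrent_streams(aggregate_tokens_per_s, per_user_tokens_per_s):
    """Upper bound on simultaneous users at a target per-user generation rate."""
    if per_user_tokens_per_s <= 0:
        raise ValueError("per-user rate must be positive")
    return int(aggregate_tokens_per_s // per_user_tokens_per_s)


# Assumed figures: 20 tokens/s per user, and a deployment whose throughput doubles.
print(concurrent_streams(10_000, 20))
print(concurrent_streams(20_000, 20))
```

Under these assumptions, doubling aggregate throughput doubles the number of users that can be served at the same responsiveness.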

2. Purpose-Built for Real-World AI Deployment

While many chips focus on training, Ironwood is optimised for inference, making it ideal for production environments where speed and cost efficiency matter.

3. Reduced Cost and Energy Consumption

Higher efficiency means lower cloud costs and smaller energy requirements for the same workload.

4. Massive Scalability

Ironwood supports large TPU pod configurations, enabling the creation of supercomputers capable of running the largest AI models available today.

5. Integrated with Google Cloud

Because Ironwood is offered through Google Cloud, developers do not need to buy or manage physical hardware. They can deploy AI workloads directly through Google Cloud services.

6. Supports DevOps, MLOps, and DevSecOps Pipelines

Engineers can focus on:

deployment pipelines

monitoring

scaling

security

CI/CD for AI

rather than hardware maintenance.

Ironwood vs CPU vs RAM vs GPU

| Component | Purpose | Relation to Ironwood |
| --- | --- | --- |
| CPU | General-purpose computing | A different kind of processor; Ironwood does not replace it |
| RAM | System memory | Ironwood is not memory; it is a processor with its own attached HBM |
| GPU | Graphics and parallel computing, including AI | Similar in purpose but different in design |
| Ironwood TPU | AI acceleration | Dedicated AI hardware |
| HBM | High-bandwidth memory for accelerators | Attached to Ironwood for AI workloads |

Why Ironwood Matters for the Future of AI

General-purpose hardware struggles to keep up with the growing size and complexity of modern AI models. Ironwood is designed to address these limitations by offering:

faster model performance

lower inference costs

reduced response time

higher user capacity

cloud-scale reliability

strong integration with enterprise AI platforms

For businesses and engineers, Ironwood represents the next generation of AI infrastructure.

Conclusion

Ironwood is a major leap forward in AI computing. It is not a CPU or RAM but a powerful, purpose-built TPU designed to handle the world’s largest AI models with exceptional speed and efficiency. As AI becomes more deeply integrated into business and daily technology, hardware like Ironwood will play a central role in powering that evolution.

If you are exploring cloud computing, DevOps, or AI infrastructure, understanding Ironwood and similar accelerators is essential. They represent the future foundation of large-scale AI systems.
