AI-Powered Hacking in 2025: The Rising Threat and How to Defend Against It

Introduction

Artificial Intelligence (AI) is revolutionizing industries—from healthcare to finance—by automating tasks, improving efficiency, and enhancing decision-making. However, as AI technology advances, cybercriminals are also leveraging it to launch more sophisticated and dangerous cyberattacks. By 2025, AI-powered hacking is expected to become more prevalent, making traditional cybersecurity measures less effective.

This article explores how hackers are using AI, real-world examples of AI-driven cybercrimes, and actionable steps individuals and businesses can take to protect themselves.

How AI is Fueling Cyberattacks

AI enhances cyberattacks by making them faster, more targeted, and harder to detect. Below are the key ways cybercriminals are using AI:

1. AI-Powered Phishing Attacks (The New Age of Scams)

Phishing attacks—where hackers trick victims into revealing sensitive information—are becoming more convincing thanks to AI.

How AI Enhances Phishing:

- Personalized Scams: AI scans social media profiles, emails, and public records to craft highly believable messages.
- Bypassing Spam Filters: AI modifies email wording and structure to evade detection by security software.
- Voice & Video Cloning: AI can mimic a person’s voice or even generate deepfake videos to deceive victims.

Real-World Example:

In 2024, a finance employee at a multinational company received an email that appeared to be from the CEO, requesting an urgent wire transfer. Because AI had made the message polished and convincing, the employee transferred $500,000 before realizing it was a scam.
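Because AI-written phishing is fluent, spotting bad grammar no longer works. One defensive habit is to check signals that do not depend on how well a message is written, such as whether an email claiming to come from an executive actually comes from the company's own domain. The sketch below is a minimal, rule-based illustration of that idea, not a production filter; the domain, executive names, and keyword list are hypothetical placeholders. The keyword check is the weakest signal here, since AI rewording can avoid exactly those phrases.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical placeholders: a real system would pull these from its own directory.
COMPANY_DOMAIN = "example.com"
EXECUTIVE_NAMES = {"jane doe", "john smith"}

# Weak signal: AI-generated text can easily avoid these exact phrases.
URGENCY_PATTERNS = [r"\burgent\b", r"\bimmediately\b", r"\bwire transfer\b", r"\bconfidential\b"]


@dataclass
class Email:
    display_name: str    # name shown in the "From" field, e.g. "Jane Doe"
    sender_address: str  # actual sending address
    subject: str
    body: str


def sender_domain(address: str) -> str:
    """Return the lowercase domain part of an email address."""
    return address.rsplit("@", 1)[-1].lower()


def impersonation_flags(msg: Email) -> List[str]:
    """List reasons the message may be executive impersonation (empty list if none)."""
    flags = []
    claims_executive = msg.display_name.strip().lower() in EXECUTIVE_NAMES
    if claims_executive and sender_domain(msg.sender_address) != COMPANY_DOMAIN:
        flags.append("executive display name from external domain")
    text = f"{msg.subject} {msg.body}".lower()
    hits = [p for p in URGENCY_PATTERNS if re.search(p, text)]
    if hits:
        flags.append(f"urgency/payment language: {len(hits)} pattern(s)")
    return flags


suspicious = Email("Jane Doe", "jane.doe@examp1e-mail.com", "Urgent request",
                   "Please process a wire transfer immediately. Keep this confidential.")
print(impersonation_flags(suspicious))
# ['executive display name from external domain', 'urgency/payment language: 4 pattern(s)']
```

A lookalike domain such as examp1e-mail.com is caught by the domain comparison no matter how polished the wording is.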

2. AI-Driven Password Cracking (Breaking into Accounts in Seconds)

Hackers are using AI to crack passwords at alarming speed.

How AI Cracks Passwords:

- Analyzing Leaked Databases: AI studies millions of leaked passwords to predict common patterns.
- Brute Force with Intelligence: Instead of random guesses, AI uses machine learning to try the most likely passwords first.

Real-World Example:

A cybersecurity firm tested PassGAN, an AI password-guessing tool built on a generative adversarial network (GAN). It cracked 51% of common passwords in under a minute, including predictable ones such as “Password123” and “Admin@2023”.
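"Most likely first" guessing works because many passwords follow the same template: a common word, a short number or year, and maybe one symbol, exactly like “Password123” and “Admin@2023”. Turning that around, the sketch below flags passwords that fit the template. It is a minimal defensive illustration, not how PassGAN works; the word list, symbol set, and 12-character threshold are illustrative assumptions, and real checks typically compare against large breached-password lists instead of a hand-written set.

```python
import re
from typing import List

# Illustrative only: real checks compare against large breached-password lists.
COMMON_BASE_WORDS = {"password", "admin", "welcome", "qwerty", "letmein", "summer"}

# Template: letters, optional symbol, 1-4 digits, optional symbol (e.g. "Admin@2023").
TEMPLATE = re.compile(r"^([A-Za-z]+)([!@#$%&*]?)(\d{1,4})([!@#$%&*]?)$")

MIN_LENGTH = 12  # assumed policy threshold


def predictability_reasons(password: str) -> List[str]:
    """Explain why a password is easy for pattern-first guessing (empty list if no reason found)."""
    reasons = []
    match = TEMPLATE.match(password)
    base = match.group(1) if match else password
    if base.lower() in COMMON_BASE_WORDS:
        reasons.append("built on a very common word")
    if match:
        reasons.append("follows the word + number (+ symbol) template")
    if len(password) < MIN_LENGTH:
        reasons.append(f"shorter than {MIN_LENGTH} characters")
    return reasons


for candidate in ["Password123", "Admin@2023", "correct-horse-battery-staple"]:
    print(candidate, predictability_reasons(candidate))
```

An empty list does not prove a password is strong; it only means this particular shortcut does not apply.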

3. Deepfake Social Engineering (The Rise of Digital Impersonation)

Deepfake technology uses AI to create fake videos, images, or audio that look and sound real. Cybercriminals use this for:

- CEO Fraud: Impersonating executives to authorize fraudulent transactions.
- Political Manipulation: Spreading fake news to influence elections.
- Blackmail & Extortion: Creating fake compromising videos to extort money.

Real-World Example:

In 2023, a deepfake audio call impersonated a bank manager, instructing an employee to transfer $250,000 to an offshore account. The voice was so realistic that the employee complied without suspicion.
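A cloned voice defeats "I recognized the caller" as a security check, so a common countermeasure is a rule that large transfers must be confirmed through a channel the requester did not choose (for example, calling back a number already on file) and approved by a second person. The sketch below encodes such a rule; the $10,000 threshold and the field names are illustrative assumptions, not an established standard.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

CALLBACK_THRESHOLD_USD = 10_000  # assumed threshold; set per organization


@dataclass
class TransferRequest:
    amount_usd: float
    requested_by: str                      # identity the caller claims
    callback_confirmed: bool = False       # confirmed by calling a number already on file
    second_approver: Optional[str] = None  # independent person who signed off


def may_execute(req: TransferRequest) -> Tuple[bool, str]:
    """Decide whether a transfer may proceed without relying on recognizing a voice or face."""
    if req.amount_usd < CALLBACK_THRESHOLD_USD:
        return True, "below callback threshold"
    if not req.callback_confirmed:
        return False, "call back a number on file; do not trust the incoming call"
    if req.second_approver is None or req.second_approver == req.requested_by:
        return False, "needs an independent second approver"
    return True, "confirmed out of band and dual-approved"


# The scenario above: a convincing voice alone is not enough.
print(may_execute(TransferRequest(250_000, "bank manager")))
# (False, 'call back a number on file; do not trust the incoming call')
```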

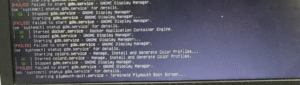

4. AI-Enhanced Malware (Smarter, Stealthier Viruses)

Traditional malware follows fixed patterns, making it easier to detect. AI-powered malware, however, can:

- Adapt in Real-Time: Change its behavior to avoid antivirus detection.
- Learn from the Environment: Study network traffic to find vulnerabilities.
- Self-Replicate: Spread silently without human intervention.

Real-World Example:

In 2024, an AI-powered ransomware attack infected a hospital’s network. The malware mimicked normal user activity for days before encrypting critical patient files and demanding a $2 million ransom.
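Malware can mimic normal activity while it waits, but the encryption phase itself typically rewrites many files in a short time. A simple defensive signal is to compare the current rate of file modifications against a host's normal baseline and flag large spikes. The sketch below does this with a z-score; the counts are made up, the threshold of 4.0 is an assumption, and real endpoint tools combine many signals, since slow, throttled encryption could stay under a threshold like this.

```python
import statistics
from typing import List


def burst_minutes(baseline: List[int], recent: List[int], z_threshold: float = 4.0) -> List[int]:
    """Return indexes of minutes in `recent` whose file-modification count is far above the baseline."""
    mean = statistics.mean(baseline)
    # Population standard deviation; fall back to 1.0 so a perfectly flat baseline doesn't divide by zero.
    stdev = statistics.pstdev(baseline) or 1.0
    return [i for i, count in enumerate(recent) if (count - mean) / stdev > z_threshold]


# Made-up per-minute counts of files modified on one host.
baseline_counts = [12, 9, 15, 11, 10, 14, 13, 8, 12, 11]
today = [13, 10, 12, 480, 950]
print(burst_minutes(baseline_counts, today))  # [3, 4]
```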

5. AI-Controlled Botnets (The Next Generation of Cyber Warfare)

A botnet is a network of infected devices (like computers, cameras, and IoT gadgets) controlled by hackers. AI makes botnets more dangerous by:

- Automating Attacks: Launching large-scale DDoS (Distributed Denial of Service) attacks without human input.
- Self-Healing: If one part of the botnet is shut down, AI redirects the attack through other devices.

Real-World Example:

In 2023, an AI-controlled botnet consisting of millions of smart home devices crashed a major e-commerce website for 12 hours, causing $10 million in losses.
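On the defensive side, one common building block against request floods is per-source rate limiting. The sketch below uses a token bucket: each client address earns tokens at a steady rate and spends one per request, so short bursts pass while a sustained flood from one source is dropped. The rate and capacity values are illustrative assumptions. A botnet of millions of devices can keep each device under any per-source limit, so in practice this is only one layer alongside upstream DDoS protection.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill tokens in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """Keep one token bucket per client address."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_ip: str, now: Optional[float] = None) -> bool:
        # Pass `now` explicitly for testing; omit it to use the monotonic clock.
        now = time.monotonic() if now is None else now
        bucket = self.buckets.get(client_ip)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, now)
            self.buckets[client_ip] = bucket
        return bucket.allow(now)


limiter = PerClientLimiter(rate=5, capacity=10)
# One client fires 20 requests at the same instant: only the burst allowance gets through.
print(sum(limiter.allow("203.0.113.7", now=0.0) for _ in range(20)))  # 10
```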

How to Protect Yourself from AI-Powered Hacking

For Individuals:

For Businesses:

The Future of AI in Cybersecurity: A Double-Edged Sword

While AI is empowering cybercriminals, it’s also being used to fight back:

- Google’s AI-based security tools aim to predict and block threats before they strike.
- Microsoft Defender uses AI to help detect zero-day exploits (attacks on previously unknown vulnerabilities).
- IBM’s Watson for Cybersecurity is designed to analyze large volumes of threat data in seconds.

The key takeaway? AI is a powerful tool that can be used for good or evil. Staying informed and adopting advanced security measures remain the best defense.

Conclusion

AI-powered hacking in 2025 will be more sophisticated, but awareness and proactive security can mitigate risks. By understanding how hackers use AI and implementing strong protections, individuals and businesses can stay ahead of cybercriminals.